
Introduction

Game Overview

Cat Connect is a line-connect puzzle game, a popular genre in which players draw paths to connect matching items without crossing existing paths. In this game, players connect matching colored cats on a predefined grid. Each level consists of a grid containing pairs of colored cats. The objective is to form valid connections between cats of the same color while avoiding cells that are already part of a path or occupied by cats of another color.

Grid Structure

A grid is a two-dimensional matrix of cells; for example, a 3×3 grid contains nine cells. Depending on the level design, the system populates it with one to three cat colors. Each color appears as a pair of cats occupying two cells, and the arrangement of these pairs determines the complexity of the possible paths.
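A minimal sketch of how such a grid could be modeled, assuming a list-of-lists representation in which each cell holds either a color name or `None`. The `Grid` class and its `place_pair` and `cell_count` methods are hypothetical names for illustration, not the game's actual code.

```python
from dataclasses import dataclass, field

EMPTY = None  # marker for a cell with no cat and no path


@dataclass
class Grid:
    """Hypothetical model: a rows x cols matrix whose cells hold a color or EMPTY."""
    rows: int
    cols: int
    cells: list = field(init=False)

    def __post_init__(self):
        self.cells = [[EMPTY] * self.cols for _ in range(self.rows)]

    def cell_count(self):
        return self.rows * self.cols

    def place_pair(self, color, a, b):
        """Place the two cats of `color` at cells a and b, each a (row, col) tuple."""
        if a == b:
            raise ValueError("a pair needs two distinct cells")
        # Check both cells first so a failed placement leaves the grid unchanged.
        for r, c in (a, b):
            if self.cells[r][c] is not EMPTY:
                raise ValueError(f"cell {(r, c)} is already occupied")
        for r, c in (a, b):
            self.cells[r][c] = color
```

For example, `Grid(3, 3)` reports `cell_count() == 9`, and a level with two colors would call `place_pair` twice.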

Game Mechanics

The objective of the game is to connect matching cats on a grid by drawing paths between them. Players must create continuous, non-overlapping routes that fill the grid without crossing or interfering with other paths. Each move is made by selecting a starting point and extending the path into adjacent cells. Movement is restricted to four directions (up, down, left, and right), which keeps navigation simple, intuitive, and consistent. Completing a level requires spatial reasoning and planning ahead so that every pair is connected without blocking later moves.
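The four-direction movement rule can be expressed as a small legality check. This is a hedged sketch that assumes a text grid where `.` marks an empty cell and a letter marks a cat of that color; `neighbors`, `can_extend`, and the `occupied` set are illustrative names, not part of the game.

```python
# Up, down, left, right as (row delta, column delta); no diagonal moves.
DIRECTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def neighbors(cell, rows, cols):
    """Return the in-bounds cells orthogonally adjacent to `cell`."""
    r, c = cell
    return [(r + dr, c + dc) for dr, dc in DIRECTIONS
            if 0 <= r + dr < rows and 0 <= c + dc < cols]


def can_extend(path, target, grid, occupied):
    """Return True if `path` may be extended by one step into `target`.

    path:     list of (row, col) cells, starting on a cat.
    grid:     list of row strings; '.' is empty, a letter is a cat color.
    occupied: set of cells already used by other drawn paths.
    """
    rows, cols = len(grid), len(grid[0])
    if target not in neighbors(path[-1], rows, cols):
        return False  # only single steps up, down, left or right
    if target in occupied or target in path:
        return False  # paths may not overlap or cross
    color = grid[path[0][0]][path[0][1]]
    content = grid[target[0]][target[1]]
    return content == "." or content == color  # empty cell, or the matching cat
```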

Win/Loss Conditions

- Win: The AI agent connects every pair of matching colors and fills every cell of the grid.

- Loss: The agent becomes stuck and fails to progress. If it reaches a stuck penalty threshold of 10 consecutive failed attempts, the level is marked unsolvable, and the agent moves to the next level.
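The win and loss conditions above could be checked roughly as follows. The sketch assumes each connection is stored as a list of cells running from one cat to its match and that paths were built only from legal four-direction moves; `is_win`, `record_attempt`, and `STUCK_PENALTY_LIMIT` are hypothetical names, with the limit of 10 taken from the text.

```python
# Number of consecutive failed attempts before a level is marked unsolvable.
STUCK_PENALTY_LIMIT = 10


def is_win(grid, paths):
    """Return True if every color is connected and every cell is filled.

    grid:  list of row strings; '.' is an empty cell, a letter is a cat.
    paths: dict mapping color -> list of (row, col) cells, cat to cat.
    Assumes each path was built from legal four-direction moves.
    """
    colors = {ch for row in grid for ch in row if ch != "."}
    for color in colors:
        path = paths.get(color)
        if not path or path[0] == path[-1]:
            return False  # color not connected yet
        (r0, c0), (r1, c1) = path[0], path[-1]
        if grid[r0][c0] != color or grid[r1][c1] != color:
            return False  # path does not run between the two matching cats
    covered = {cell for path in paths.values() for cell in path}
    return len(covered) == len(grid) * len(grid[0])


def record_attempt(solved, failure_streak):
    """Update the consecutive-failure streak; return (streak, unsolvable)."""
    streak = 0 if solved else failure_streak + 1
    return streak, streak >= STUCK_PENALTY_LIMIT
```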

Note: All experiments were conducted on a system equipped with 32 GB DDR4 RAM, an NVIDIA GTX 1050 Ti (4 GB) GPU, and an Intel Core i5-10400 CPU.

AI TRAINING

Role of the Agent

The AI agent functions as both a solver and an evaluator, designed to intelligently interact with the game grid and support the level-design process. Its primary role is to simulate real player behavior, analyze grid structure, and ensure each level meets expected quality and difficulty standards.

To do this, the agent must understand and operate within the spatial rules of the game. It identifies ‘go’ and ‘no-go’ areas to determine where movement is allowed and where it is restricted. Go areas include all empty, navigable cells the agent can move through when forming connections. No-go areas include any cells already occupied by an existing path or by another color, which makes them blocked and unusable. Since the agent can only move in four directions (up, down, left, and right), this mapping is essential for avoiding conflicts and producing clean, valid paths.

Its main functions are:

- Grid Solving: Navigates the available paths, overcomes obstacles, and makes strategic decisions to mimic optimal or near-optimal player strategies.

- Go and No-Go Zone Identification: Maps areas where movement is allowed versus blocked, ensuring the grid layout remains coherent and logically structured.

- Playability Validation: Confirms that each level can be completed without dead-ends, impossible routes, or unintended traps.

- Difficulty Assessment: Generates quantitative difficulty scores based on metrics such as attempts, path complexity, and solve time, providing consistent and unbiased evaluation.

- Designer Support: Reduces manual testing effort, minimizes trial-and-error, and replaces subjective judgments with data-driven insights.
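Go/no-go identification amounts to a per-color boolean mask over the grid. Below is a minimal sketch under the same text-grid assumption (`.` for empty, letters for cats); `go_no_go_map` and `render` are hypothetical helpers. Treating the cats of the color being routed as go cells, so that a path can start and end on them, is an assumption rather than something stated above.

```python
def go_no_go_map(grid, occupied, color):
    """Return a 2-D list of booleans for `color`: True = go, False = no-go.

    grid:     list of row strings; '.' is empty, a letter is a cat color.
    occupied: set of (row, col) cells already covered by a drawn path.
    """
    mask = []
    for r, row in enumerate(grid):
        line = []
        for c, ch in enumerate(row):
            if (r, c) in occupied:
                line.append(False)  # no-go: already part of a path
            elif ch == ".":
                line.append(True)  # go: empty, navigable cell
            else:
                line.append(ch == color)  # other colors are no-go
        mask.append(line)
    return mask


def render(mask):
    """Show a mask as strings: 'G' for go, 'X' for no-go."""
    return ["".join("G" if ok else "X" for ok in row) for row in mask]
```

For the grid `["R.B", "...", "B.R"]` with the center cell already on a path, the mask for red renders as `["GGX", "GXG", "XGG"]`.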

Problem Solved by the Agent

The AI agent is designed to tackle two fundamental challenges in level design and evaluation:

- Grid Solving: The agent analyzes the structure of the grid to identify all possible paths between matching elements. It navigates efficiently by avoiding no-go zones, respects movement constraints, and ensures that all connections can be completed without conflicts. This allows the agent to mimic real player strategies and anticipate potential bottlenecks or dead-ends in the level layout.

- Level Validation: Beyond solving, the agent assesses whether a level is genuinely playable. It tests each design against a range of simulated attempts, using threshold-based metrics to evaluate success rates, attempt counts, and path complexity. Levels that fall outside acceptable parameters are flagged, ensuring only solvable, well-balanced levels reach players.

By addressing both of these challenges, the agent provides designers with objective, data-driven feedback, reducing manual testing effort and improving overall level quality.

Solving the grid

The agent solves the grid by connecting each color to its counterpart. To do this, it first picks a cat at random, then identifies go areas (empty cells) and no-go areas (cells holding other colors or already used by a path). If the agent is stuck on a particular color for a set amount of time, it switches to another randomly chosen color and continues trying to solve the level. If it remains stuck on the level for an extended period, it incurs a stuck penalty and the level restarts from the beginning. When the agent accumulates 10 consecutive stuck penalties on a level, the level is deemed unsolvable and the agent moves on to the next level. Throughout this process, the agent tracks success metrics such as win/loss ratios and number of attempts, which inform difficulty assessment and level tagging.
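The solving procedure above can be approximated by a simple randomized agent. This sketch is not the trained agent described in this document: it substitutes a plain random walk for the learned policy and assumes exactly two cats per color. The per-color step budget (`color_budget`), the number of stuck colors tolerated before a stuck penalty (`max_color_switches`), and all function names are hypothetical; only the limit of 10 consecutive stuck penalties comes from the text.

```python
import random

# Up, down, left, right; the agent never moves diagonally.
DIRECTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]
MAX_STUCK_PENALTIES = 10  # from the text: 10 consecutive penalties => unsolvable


def find_cats(grid):
    """Map each color letter to the list of its cat cells."""
    cats = {}
    for r, row in enumerate(grid):
        for c, ch in enumerate(row):
            if ch != ".":
                cats.setdefault(ch, []).append((r, c))
    return cats


def random_walk(grid, start, goal, used, max_steps, rng):
    """Walk randomly from start toward goal through go cells; None if stuck."""
    rows, cols = len(grid), len(grid[0])
    path, blocked = [start], set(used) | {start}
    for _ in range(max_steps):
        r, c = path[-1]
        options = [(r + dr, c + dc) for dr, dc in DIRECTIONS
                   if 0 <= r + dr < rows and 0 <= c + dc < cols
                   and (r + dr, c + dc) not in blocked
                   and (grid[r + dr][c + dc] == "." or (r + dr, c + dc) == goal)]
        if not options:
            return None  # dead end: no go cells left for this color
        step = rng.choice(options)
        path.append(step)
        if step == goal:
            return path
        blocked.add(step)
    return None  # step budget for this color exhausted


def play_episode(grid, cats, rng, color_budget, max_color_switches):
    """One attempt at the level; True only if all colors connect and all cells fill."""
    paths, used, switches, last_failed = {}, set(), 0, None
    while len(paths) < len(cats):
        if switches >= max_color_switches:
            return False  # stuck on the level too long: stuck penalty
        pending = sorted(c for c in cats if c not in paths)
        if last_failed in pending and len(pending) > 1:
            pending.remove(last_failed)  # switch away from the stuck color
        color = rng.choice(pending)
        start, goal = cats[color]
        path = random_walk(grid, start, goal, used, color_budget, rng)
        if path is None:
            switches, last_failed = switches + 1, color
            continue
        paths[color], last_failed = path, None
        used.update(path)
    return len(used) == len(grid) * len(grid[0])


def solve_level(grid, seed=0, color_budget=50, max_color_switches=20):
    """Replay the level until solved or until 10 consecutive stuck penalties."""
    rng = random.Random(seed)
    cats = find_cats(grid)
    attempts = 0
    for _ in range(MAX_STUCK_PENALTIES):
        attempts += 1
        if play_episode(grid, cats, rng, color_budget, max_color_switches):
            return {"solved": True, "attempts": attempts}
        # Otherwise: stuck penalty, restart the level from the beginning.
    return {"solved": False, "attempts": attempts}
```

On the 1×3 grid `R.R` the agent solves on its first attempt, while a layout such as `RB` / `BR`, where neither color can move, exhausts all 10 stuck penalties.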

Level evaluation and tagging

Playability Validation

The agent repeatedly plays each level and evaluates its solvability.

- Levels are tested in a circular loop: once every defined level has been played, the agent restarts from Level 1. With each new cycle, the fail threshold doubles, giving the agent more room to explore new paths before declaring a level unsolvable.

- If the agent manages to solve a level without exceeding the current fail threshold, the level is marked playable. However, if it accumulates 10 consecutive stuck penalties on a level, the level is tagged as non-playable and the agent moves on.
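The circular validation loop with a doubling fail threshold might look like the following sketch. `try_level` stands in for one attempt by the agent and is a hypothetical hook. Starting the threshold at 10 to match the stuck-penalty limit, running a fixed number of cycles, and skipping levels already marked playable in later cycles are all assumptions.

```python
import random


def validate_levels(levels, try_level, initial_threshold=10, cycles=3, seed=0):
    """Play levels in a circular loop, doubling the fail threshold each cycle.

    levels:    ordered level ids; each cycle restarts from the first one.
    try_level: hypothetical hook, try_level(level, rng) -> True if solved.
    Returns a dict mapping level id -> "playable" or "non-playable".
    """
    rng = random.Random(seed)
    status = {}
    threshold = initial_threshold
    for _ in range(cycles):
        for level in levels:
            if status.get(level) == "playable":
                continue  # assumption: solved levels are not replayed
            failures = 0
            while failures < threshold:
                if try_level(level, rng):
                    status[level] = "playable"
                    break
                failures += 1
            else:
                status[level] = "non-playable"  # threshold reached this cycle
        threshold *= 2  # more room to explore on the next cycle
    return status
```

A level tagged non-playable in one cycle is retried with a larger budget in the next, so a hard but solvable level can still be promoted to playable.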

Difficulty Assignment

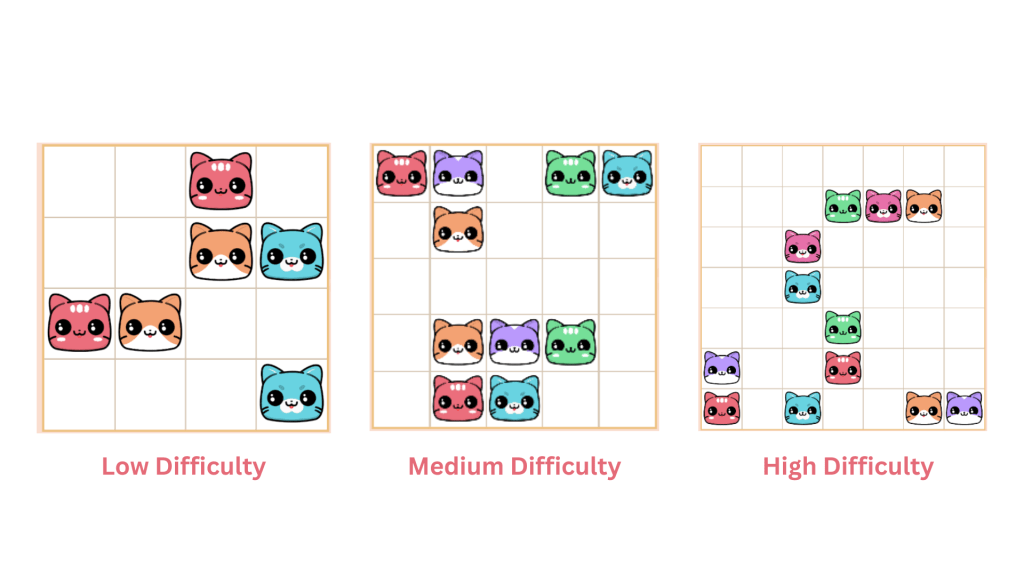

The agent evaluates each level by analyzing success ratios and the number of attempts, then assigns a corresponding difficulty tag:

- Low

- Medium

- High

This ensures levels are classified objectively, helping guide player progression and design decisions.
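A rule-based tagger along these lines could turn the two metrics into a label. The threshold values below are placeholders chosen for illustration, not tuned values from Cat Connect, and `difficulty_tag` and its parameters are hypothetical names.

```python
def difficulty_tag(success_ratio, mean_attempts,
                   easy_ratio=0.8, easy_attempts=3,
                   hard_ratio=0.4, hard_attempts=8):
    """Tag a level Low / Medium / High from two agent metrics.

    success_ratio: fraction of validation runs the agent solved (0..1).
    mean_attempts: average attempts (restarts) the agent needed per solve.
    All threshold defaults are placeholders, not the project's tuned numbers.
    """
    if not 0.0 <= success_ratio <= 1.0:
        raise ValueError("success_ratio must be in [0, 1]")
    if success_ratio >= easy_ratio and mean_attempts <= easy_attempts:
        return "Low"
    if success_ratio < hard_ratio or mean_attempts > hard_attempts:
        return "High"
    return "Medium"
```

Requiring both metrics to be favorable for "Low" but only one to be unfavorable for "High" biases ambiguous levels toward the harder tag; the opposite choice is equally defensible.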

Future goals: dynamic level generation

With increased computational resources, such as more powerful GPUs, our vision is to:

- Automatically generate new levels on demand, creating fresh and unique puzzles without manual intervention.

- Test and validate levels in real time, ensuring that each generated level is fully playable and meets quality standards.

- Automatically assign difficulty labels, using agent analysis to categorize levels as easy, medium, or hard based on player-like performance metrics.

- Deliver dynamic, endless puzzle content, providing a continuously evolving gameplay experience that adapts to player skill and engagement.

Conclusion

The Cat Connect AI framework provides a structured, data-driven approach to evaluating and managing puzzle levels. By using the agent, we reduce reliance on manual testing, ensure levels are consistently playable, and assign difficulty objectively. This not only saves time for designers and developers but also improves the player experience by delivering well-balanced and engaging challenges. With additional computational resources, the system can evolve to generate levels dynamically, offering endless, personalized puzzles that adapt to each player’s skill level.